I've been thinking about LLMs as "machines for crunching language" for a while now. It's analogous to how calculators are "machines for crunching numbers."

The idea is essentially that they are mushy, semi-predictable, semi-steerable functions that let you do something like:

f(x) = y

Where x is some input text and y is some output text.

We know that, though, right? At least, everybody in tech knows that, to some degree. I think many users just see them as "bots" or "AI" that can respond in a human-like way. But you and I? We know they're a bit more mechanical than that.

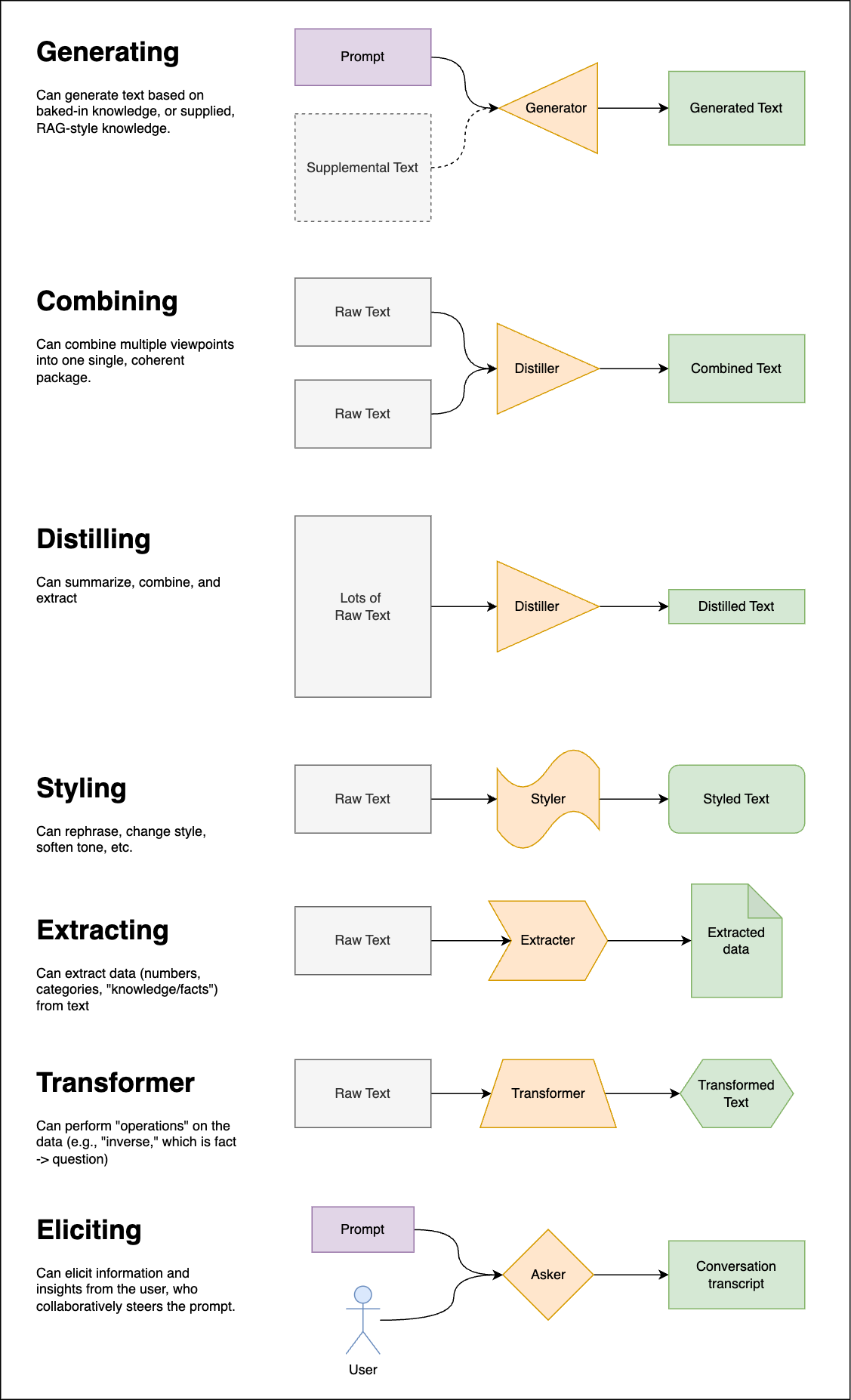

Anyway, having used these things for the better part of a year now (as a low-cost therapist, a coding assistant, and a tool for text classification, summarization, and data extraction, among other things), I've gained an appreciation for what they can do. I've come up with a series of "primitives" that I intend to use as architectural building blocks for larger, more interesting systems.

Without further ado, here they are:

Quick overview with some descriptions, in case the image is unintelligible for you. I asked ChatGPT 4o to come up with some examples, which I've included.

Generating

Generate text based on baked-in knowledge, or supplied, RAG-style knowledge.

Example: Writing a Story

Input: "Write a short story about a dragon who learns to bake bread."

Output: "Once upon a time in a distant kingdom, there lived a dragon named Drago who loved the smell of freshly baked bread..."

Combining

Combine multiple viewpoints into one single, coherent package.

Example:

Input 1 (Monologue 1): "I believe that remote work is the future. It allows employees to have a better work-life balance, reduces commuting time..."

Input 2 (Monologue 2): "In-office work is essential for fostering collaboration and building strong team relationships. Being in the same physical space encourages spontaneous brainstorming..."

Output (Synthesized Perspective): "Both remote and in-office work have their unique advantages and challenges. Remote work offers better work-life balance... Conversely, in-office work fosters collaboration ... Finding a hybrid approach that balances these benefits while mitigating the challenges might be the optimal solution."

Distilling

Summarize, combine, and extract key points.

Example: Summarizing a Long Article

Input (Lots of Raw Text): A detailed research paper on climate change.

Output: "Climate change is primarily driven by human activities, leading to global warming and severe weather patterns."

Styling

Rephrase, change style, soften tone, etc.

Scenario: Transforming a technical report into a poetic description.

Input (Technical Report): "The solar panels operate at an efficiency of 20%. They convert sunlight into electrical energy, which is then stored in batteries for later use. These panels are most effective during peak sunlight hours."

Output (Poetic Description): "Beneath the golden sun, panels of hope stretch towards the sky, capturing its radiant kiss. At twenty percent, they weave sunlight into threads of energy, storing this luminous gift in waiting vessels. When the sun is at its zenith, their magic is most profound, a dance of light and power."

Extracting

Extract data (numbers, categories, "knowledge/facts") from text.

Example: Extracting data from a product review.

Input (Product Review): "I recently purchased the XYZ Smartphone. It has a 6.5-inch display, 128GB of storage, and a battery life that lasts up to 12 hours. The camera quality is outstanding, with a 48MP sensor that captures stunning photos. However, I found the user interface to be somewhat clunky and not very intuitive."

Output (Extracted Data in JSON Format): { "product_name": "XYZ Smartphone", "features": { "display_size_in": 6.5, "storage_gb": 128, "battery_life_hr": 12, "camera_quality_mp": 48 }, "comments": { "positive": "outstanding camera quality", "negative": "clunky user interface" } }
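Since the output here is meant to be machine-readable, it seems worth checking it before passing it downstream. Below is a minimal sketch of that check, assuming the model returns the JSON above as plain text. The `parse_extraction` helper and the `REQUIRED_KEYS` set are hypothetical names made up for this illustration, not part of any library.

```python
import json

# Raw text as a model might return it (copied from the example above).
raw_output = '''{ "product_name": "XYZ Smartphone", "features": { "display_size_in": 6.5, "storage_gb": 128, "battery_life_hr": 12, "camera_quality_mp": 48 }, "comments": { "positive": "outstanding camera quality", "negative": "clunky user interface" } }'''

# Hypothetical schema: the top-level keys this pipeline step expects.
REQUIRED_KEYS = {"product_name", "features", "comments"}

def parse_extraction(text: str) -> dict:
    """Parse model output as JSON and check the expected top-level keys."""
    data = json.loads(text)  # raises json.JSONDecodeError on malformed output
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data

review = parse_extraction(raw_output)
print(review["features"]["storage_gb"])  # 128
```

If the check fails, one option is to re-run the extraction step rather than letting a malformed result flow into the rest of the system.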

Transformer

Perform "operations" on the data (e.g., "inverse," which is fact -> question).

Example: Converting Facts to Questions

Input: "The Eiffel Tower is in Paris."

Output: "Where is the Eiffel Tower located?"

Elicitor

Elicit information and insights from the user, who collaboratively steers the prompt.

Example: Conducting a User Interview

Input: "What features do you find most useful in a productivity app?"

Output: Conversation transcript: "User: I find the task scheduling and reminders features most useful because they help me stay organized and on track."

An honorable mention: ChatGPT 4o responded with the following when I asked it to give me a creative example of a "transformer":

Example: Transforming a news headline into a satirical headline.

Input (News Headline): "Government Announces New Environmental Policy to Combat Climate Change"

Output (Satirical Headline): "Government Vows to Fight Climate Change with Paper Straws and Good Intentions"

That feels like it sits somewhere between "transformer" and "styler." I will probably spend an afternoon asking 4o for more examples and to help me design some more systems with this... fodder for some future posts.

Anyway, hopefully you have a sense of what these are and why this lens might be useful. The point is that when we interact with LLMs via chat interfaces, we're often getting them to do some combination of all of these. It works well, most of the time, but it's not cleanly repeatable.

With these primitives, we can design and structure systems based on the different features/capabilities of the foundational models (which we can tune and tweak independently with prompts and pipelines).
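To make that a bit more concrete, here is a rough sketch of how primitives could be wired together in code. It assumes each primitive is just a fixed instruction bound to some text-in, text-out model call. The names `make_primitive`, `pipeline`, and `stub_llm` are hypothetical, and `stub_llm` is a stand-in that only tags its input so the example runs without a real model.

```python
from typing import Callable

LLM = Callable[[str], str]        # any text-in, text-out model call
Primitive = Callable[[str], str]  # a model bound to one fixed instruction

def make_primitive(instruction: str, llm: LLM) -> Primitive:
    """Bind a fixed instruction to a model, giving one single-purpose step."""
    def step(text: str) -> str:
        return llm(f"{instruction}\n\n{text}")
    return step

def pipeline(*steps: Primitive) -> Primitive:
    """Chain steps so each one's output becomes the next one's input."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run

def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model: tags the input with the instruction."""
    instruction, _, body = prompt.partition("\n\n")
    return f"[{instruction}] {body}"

distill = make_primitive("Summarize the key points.", stub_llm)
style = make_primitive("Rewrite in a friendly tone.", stub_llm)

flow = pipeline(distill, style)
print(flow("A detailed research paper on climate change."))
# [Rewrite in a friendly tone.] [Summarize the key points.] A detailed research paper on climate change.
```

Because each step is its own function with its own prompt, a single step can be tweaked and re-run without touching the others, which is the repeatability that a free-form chat lacks.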

And I have a pet theory: if we build platforms that let users pull open the hood to tweak, test, re-run, and share or exchange the prompts we build with, we'll get way better engagement, way deeper insights into user behavior, and way better prompts (through the democratic evolution that open platforms provide).

Long story short, I'm going to be building such a platform in short order. I'm really passionate about hackable software, and "prompt engineering" is the perfect way to bring a new wave of hackers into the fold. This is big.

A simple example

Here's an example flow that I built. Take a look, and tell me what you think. Does it make sense? Seem silly? Am I missing anything obvious?

What this is missing is the APIs/interfaces that these things would need to plug into. For this specific flow (which I want to test drive with that platform I want to build), I would need some kind of "admin" interface for a coach to do the lesson plan design, and then a "group chat" interface for the user to interact with the Asker in a supervised way.

In this kind of chat, the user would see the initial prompt and could tweak or quote or steer the conversation in any way they see fit. The coach could come in and participate as well, and star or react to messages which would get tagged for later processing. The whole conversation would be associated with the user's data model, and could be used to build generic insights for future lessons and marketing, as well as specific knowledge which could be folded into that student's context for future lessons.
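As a very rough sketch of the data model this implies, the conversation could be stored as a list of messages tied to a user, with a flag for the ones the coach stars. Everything here (the `Message` and `Conversation` classes, the field names, the `"student-1"` id) is a hypothetical illustration, not a design I've settled on.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Message:
    author: str            # e.g. "user", "coach", or "asker"
    text: str
    starred: bool = False  # set when the coach stars or reacts to the message

@dataclass
class Conversation:
    user_id: str  # ties the whole conversation to one user's data model
    messages: List[Message] = field(default_factory=list)

    def add(self, author: str, text: str) -> Message:
        """Append a message and return it so it can be starred later."""
        msg = Message(author, text)
        self.messages.append(msg)
        return msg

    def tagged(self) -> List[Message]:
        """Return the messages flagged for later processing."""
        return [m for m in self.messages if m.starred]

chat = Conversation(user_id="student-1")
chat.add("asker", "What features do you find most useful in a productivity app?")
reply = chat.add("user", "Task scheduling and reminders.")
reply.starred = True  # the coach stars this reply

print([m.text for m in chat.tagged()])  # ['Task scheduling and reminders.']
```

The starred messages are the natural input for an extraction step later on, while the full message list stays attached to the user for them to review.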

And in Joshua's ethical world of AI applications, the user's collected data would be fully visible and editable to the user, including the "extracted, anonymized data."

It seems like OpenAI is already experimenting with this to a limited extent with their "memories," although I can't edit them, and I can't see how they are training the model based on my chats.

Anyway, this is my current system for modeling "AI pipelines."

Request/prompts for comments:

What do you think?

Does it make sense?

Does it seem useful? Not useful?

Is someone else already working on something like this?

Do you have specific suggestions for names, new primitives, or their designs?

This is just a first pass, so any and all feedback is welcome!
